Mobile Application

Documentation for the Mobile App

General

General documentation, such as diagrams, tasks and roles

FotoFaces

Documentation for the FotoFaces API and algorithms

Functional Requirements

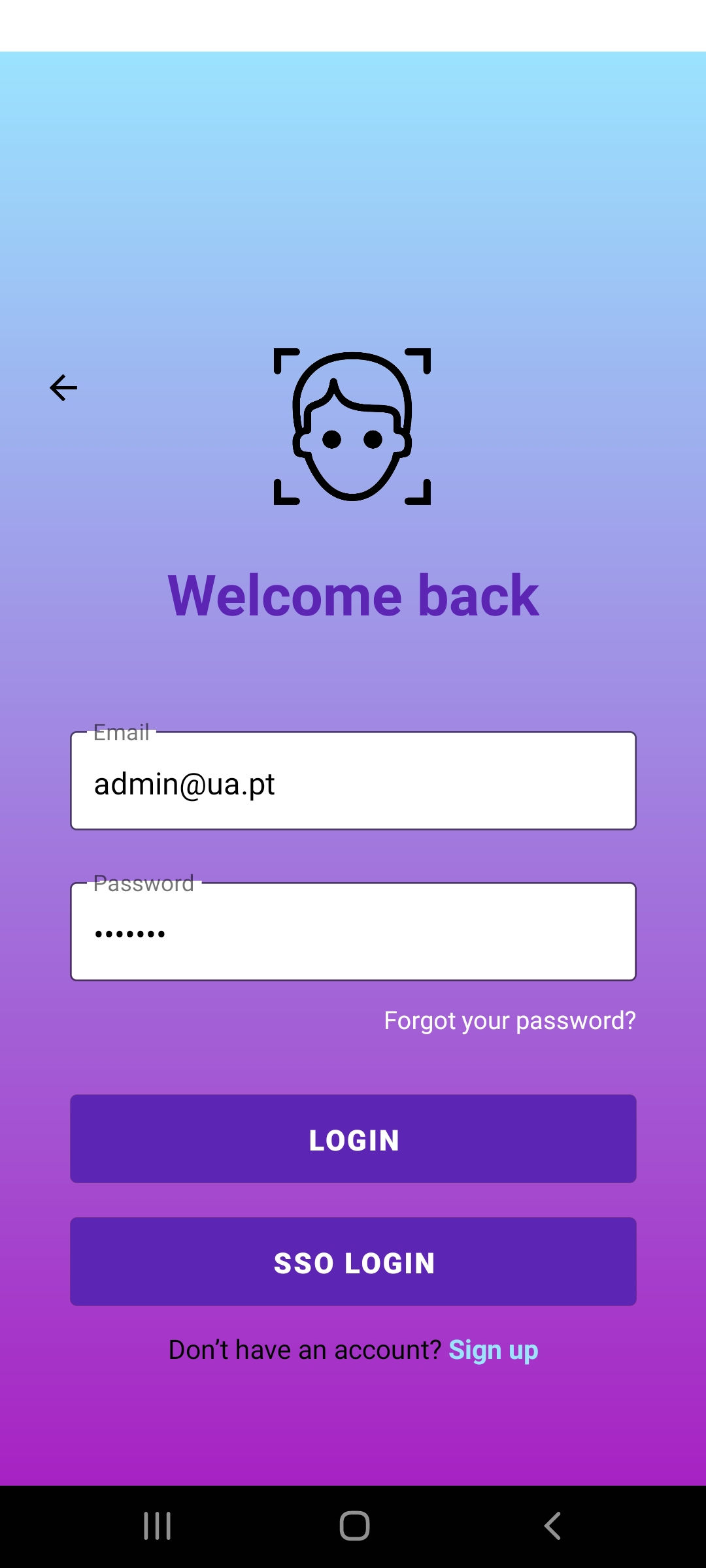

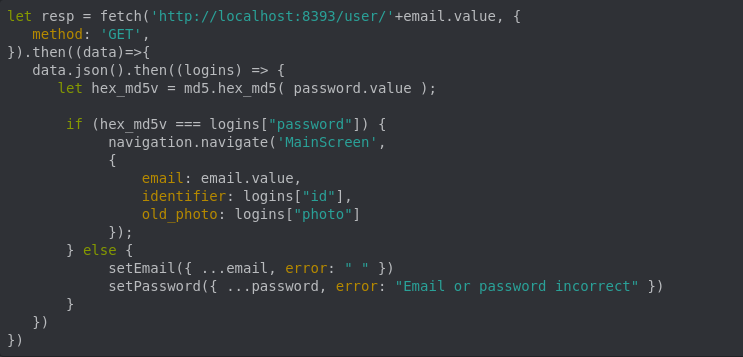

- The system must allow the user to log in with a username and password (or SSO)

- The system must allow the user to check their current photo

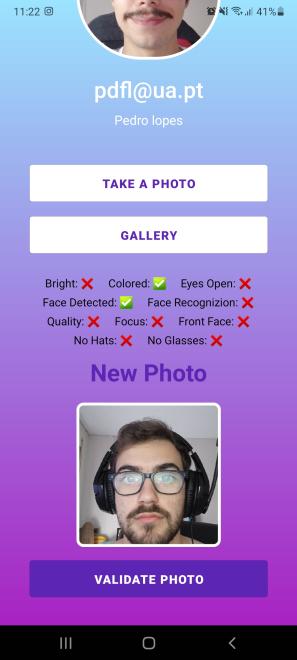

- The system must allow the user to check the properties a photo needs in order to be valid

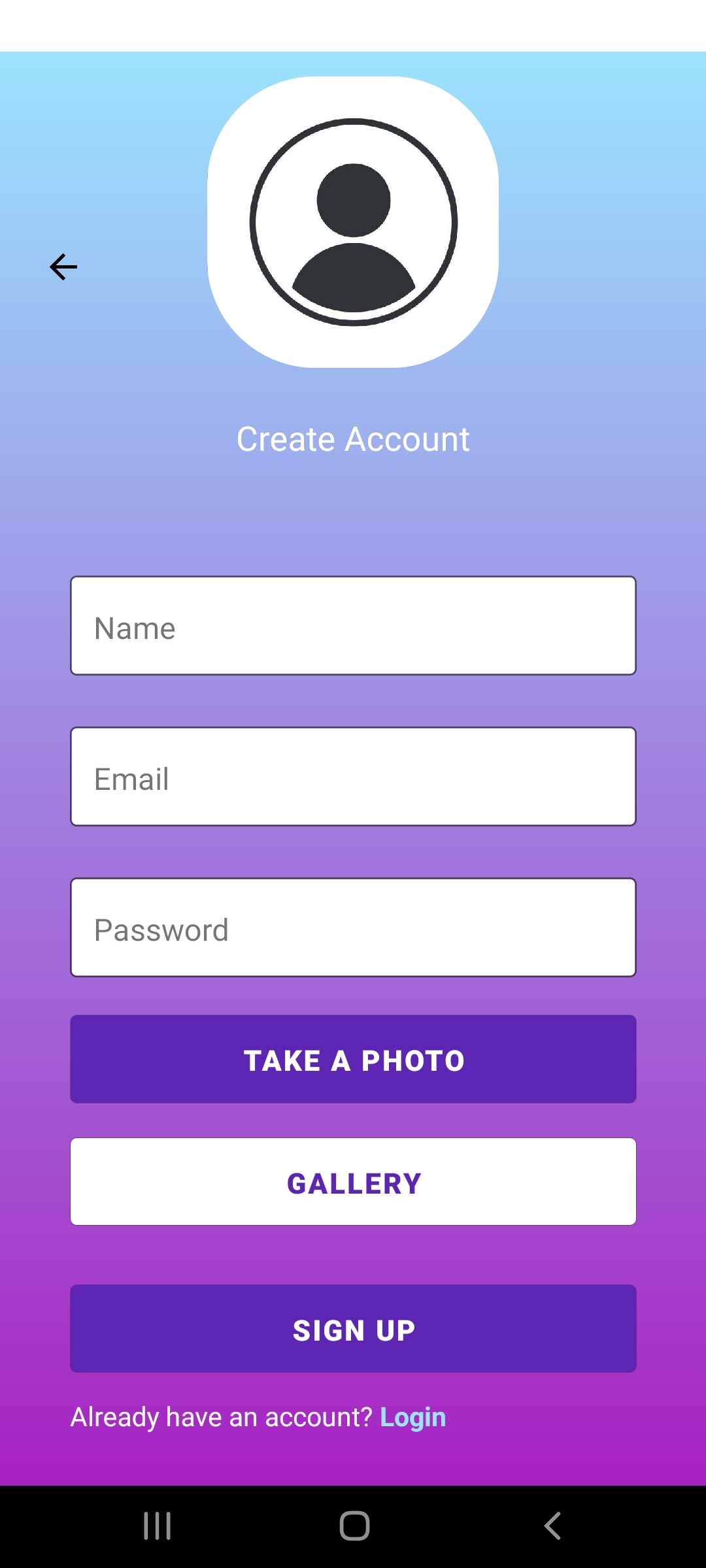

- The system must allow the user to either take a live photo or choose a photo from the gallery

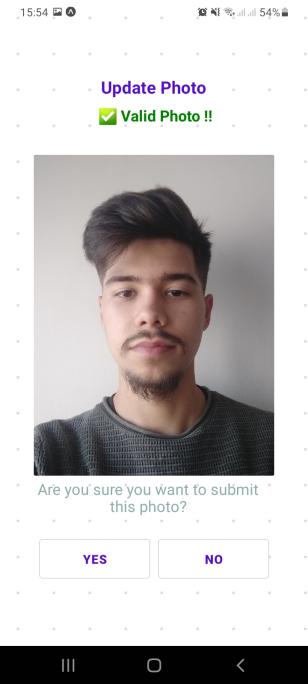

- The system must allow the user to choose between updating to a valid photo, going back to the previous menu or returning to the main page

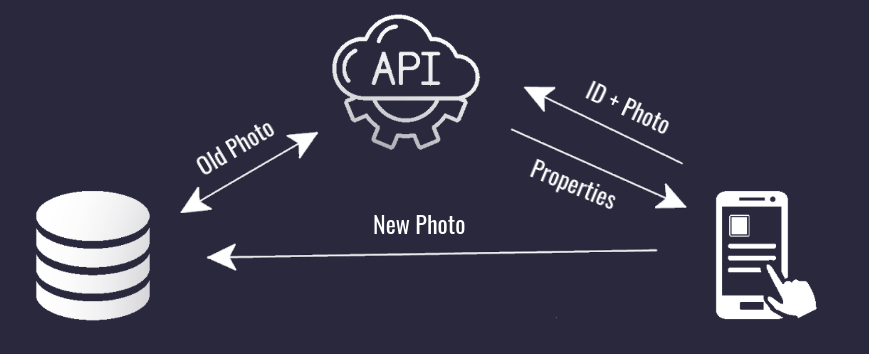

- The system must send a photo and the user ID to the FotoFaces API

- The system must receive a JSON response from the FotoFaces API with the validation properties

- The system must check the validity of a photo (based on its properties)

- The system must show the user whether the chosen photo is valid
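The validity check in the last two requirements can be sketched as follows. The real app is written in React Native; this Python version only illustrates the logic. The JSON key names, the `check_photo` function and all thresholds are hypothetical assumptions, not the actual FotoFaces response format.

```python
import json

# Hypothetical rules: boolean properties that must have a given value.
# Key names are illustrative assumptions, not the real FotoFaces format.
REQUIRED_FLAGS = {
    "has_face": True,
    "matches_old_photo": True,
    "colored": True,
    "eyes_open": True,
    "frontal_profile": True,
    "hat": False,
    "sunglasses": False,
}

# Hypothetical accepted ranges for level properties.
LEVEL_RANGES = {
    "brightness": (80.0, float("inf")),  # assumed minimum; higher is better
    "quality": (0.0, 40.0),              # assumed maximum; lower is better
}


def check_photo(response_text):
    """Return (is_valid, failed_properties) for a FotoFaces JSON reply."""
    props = json.loads(response_text)
    failed = []
    for key, expected in REQUIRED_FLAGS.items():
        if props.get(key) != expected:
            failed.append(key)
    for key, (low, high) in LEVEL_RANGES.items():
        value = props.get(key)
        if value is None or not low <= value <= high:
            failed.append(key)
    return not failed, failed
```

Returning the list of failed properties, not just a boolean, lets the app tell the user which criterion the photo did not meet.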

Development Tools

React Native

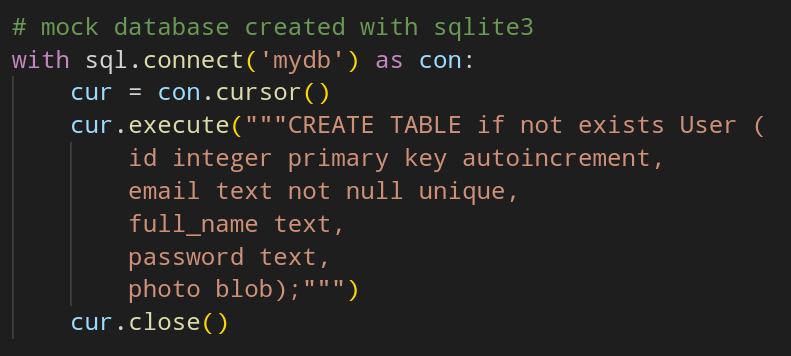

Database

Mock-Ups

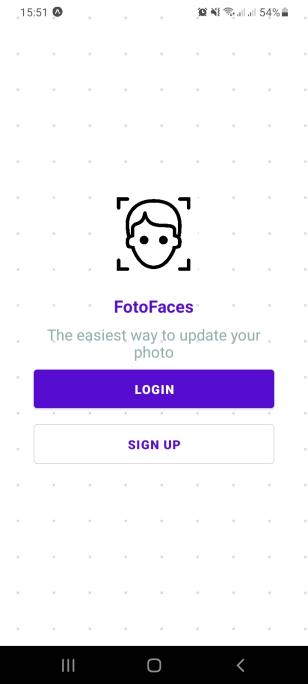

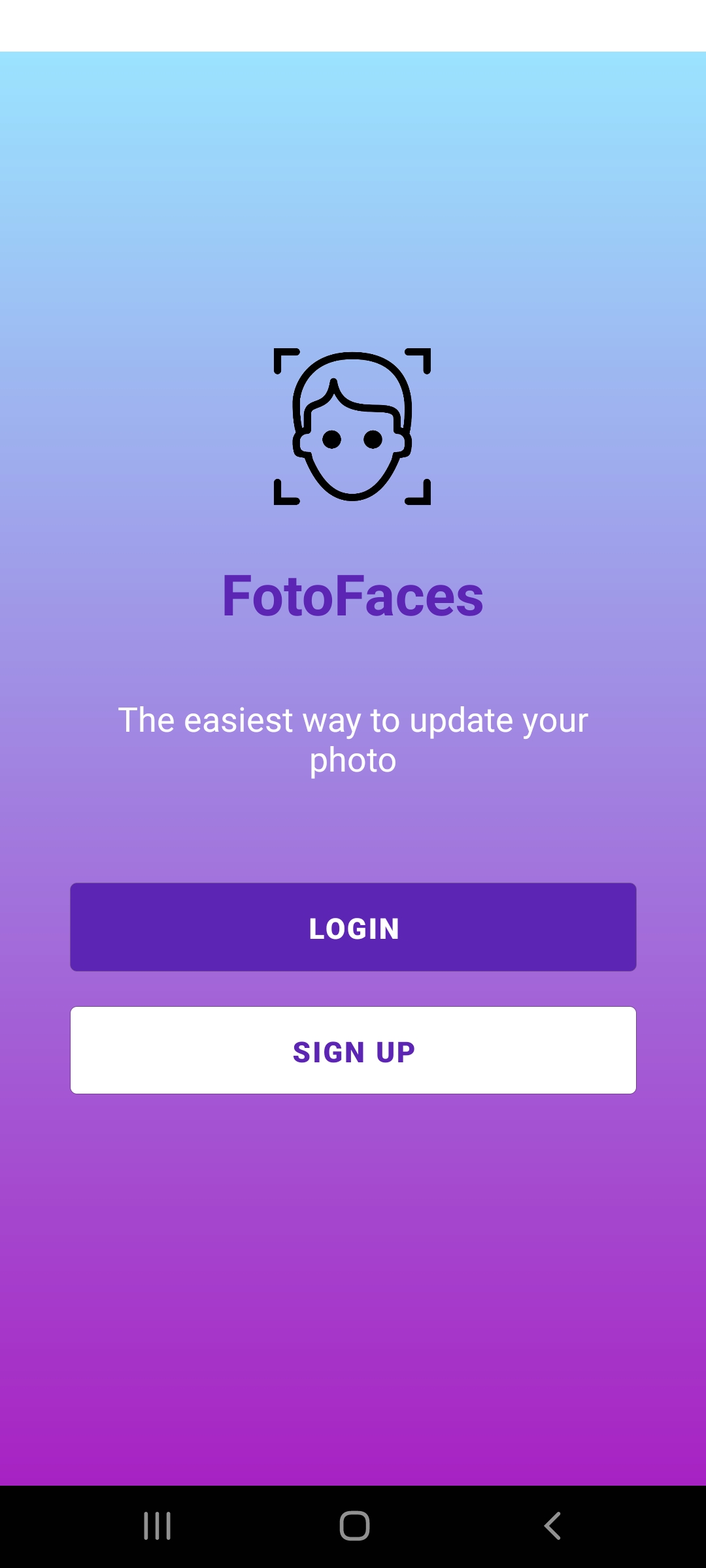

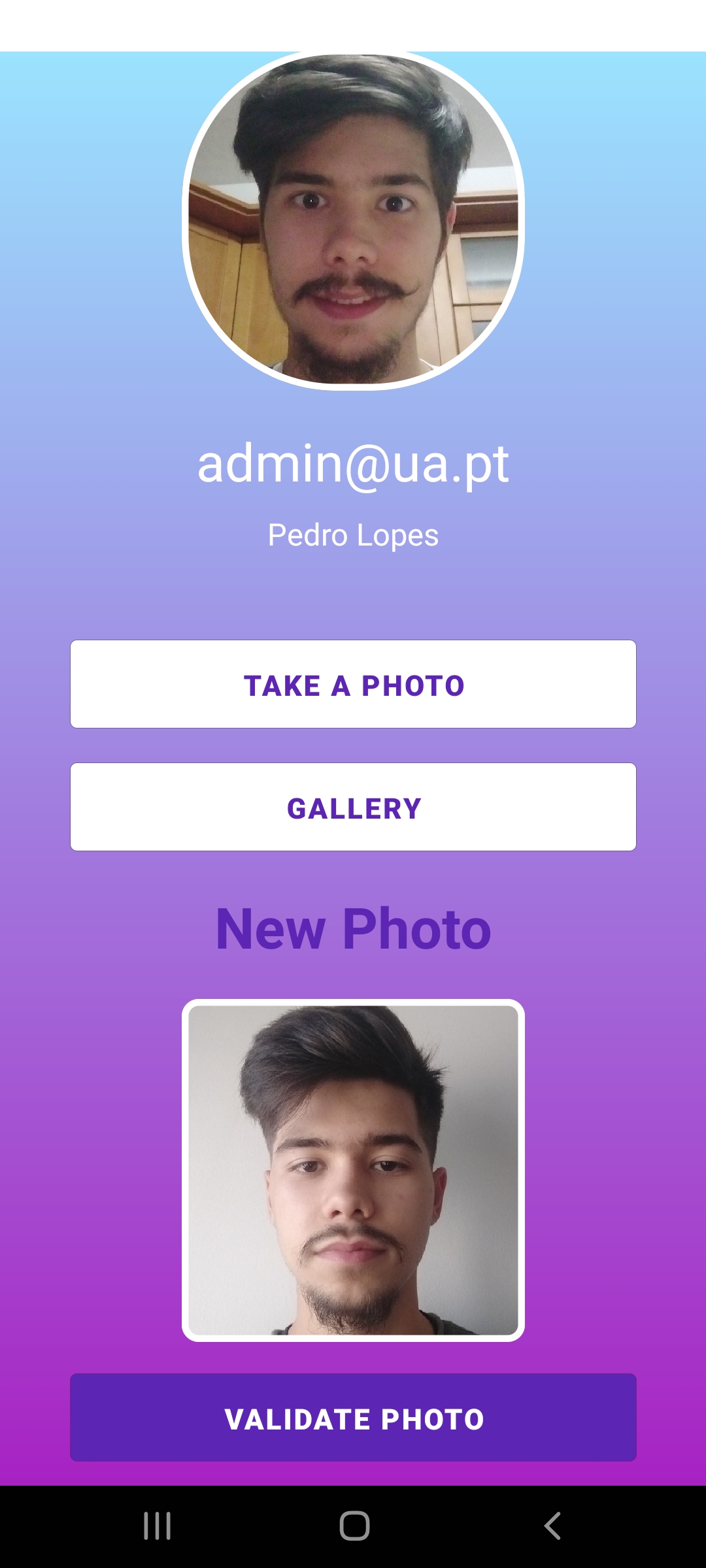

Home Page

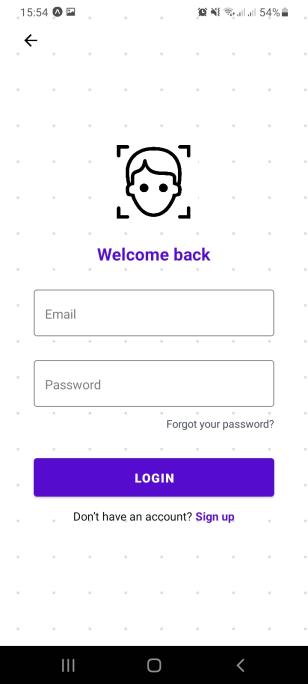

Login

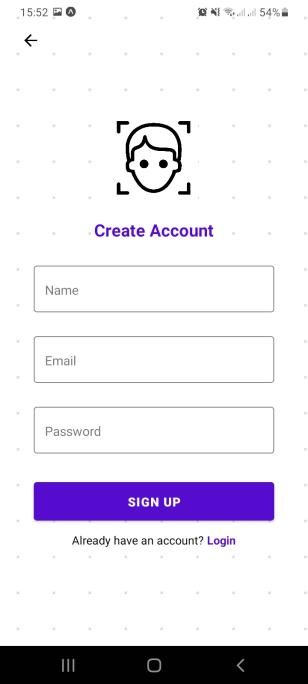

Register

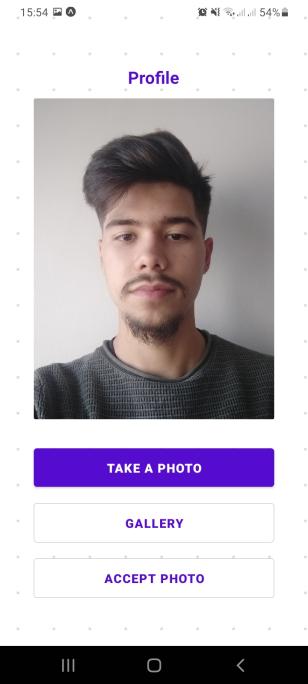

Photo Upload

Update Photo

Construction Phase

Photo

Take photo with the camera

Choose photo from gallery

Design

Start Screen

Login Screen

Register Screen

Main Screen for Validation

Main Screen with properties

Photo Accept Screen

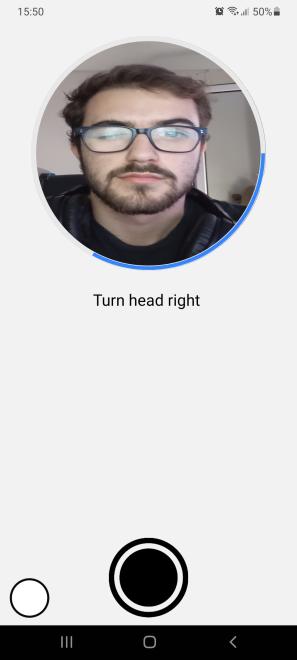

Live Detection

The Three Steps

Winking

Rotating the head

Smiling

Algorithm

The Live Detection algorithm is used when a user chooses to take a new photo on the main screen or the register screen. The camera option takes the user to an interface, based on a project by Osama Qarem, where they have to place their face inside a frame and complete some verification steps, such as winking an eye.

We use an Expo package called FaceDetector, which relies on Google's Mobile Vision framework to detect faces in images and returns an array with information about each face, e.g. the coordinates of the center of the nose, the probability that each eye is open (used to detect a wink), etc. FaceDetector is usually used together with Expo's Camera package, which lets us configure the detection, for example the minimum detection interval, which defines how often a new array of face properties is returned. By analysing that array we can tell whether the user is smiling by checking the value of the key 'smilingProbability': the higher the value, the more likely it is that the user is smiling.
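The three steps (winking, rotating the head, smiling) can be sketched as a small state machine fed with each new face array. The key names `leftEyeOpenProbability`, `rightEyeOpenProbability`, `yawAngle` and `smilingProbability` follow Expo FaceDetector's face objects; the thresholds, the yaw angle being in degrees, the step order and the `LivenessChecker` class are assumptions for illustration, written in Python rather than the app's JavaScript.

```python
# Hypothetical thresholds; the real project may use different values.
WINK_CLOSED_MAX = 0.2   # an eye counts as closed below this probability
WINK_OPEN_MIN = 0.6     # the other eye must stay clearly open
YAW_MIN_DEGREES = 20.0  # head counts as rotated beyond this yaw angle
SMILE_MIN = 0.7         # user counts as smiling above this probability


def is_winking(face):
    left = face.get("leftEyeOpenProbability", 1.0)
    right = face.get("rightEyeOpenProbability", 1.0)
    return (left < WINK_CLOSED_MAX and right > WINK_OPEN_MIN) or (
        right < WINK_CLOSED_MAX and left > WINK_OPEN_MIN
    )


def is_rotated(face):
    return abs(face.get("yawAngle", 0.0)) > YAW_MIN_DEGREES


def is_smiling(face):
    return face.get("smilingProbability", 0.0) > SMILE_MIN


# The steps must be completed in this (assumed) order.
STEPS = [("wink", is_winking), ("rotate", is_rotated), ("smile", is_smiling)]


class LivenessChecker:
    """Advances through the steps as new face arrays arrive."""

    def __init__(self):
        self.step = 0

    @property
    def done(self):
        return self.step == len(STEPS)

    def update(self, faces):
        """Feed one detection result (a list of face dicts); True once all steps pass."""
        # Only accept frames with exactly one face inside the frame.
        if self.done or len(faces) != 1:
            return self.done
        _, check = STEPS[self.step]
        if check(faces[0]):
            self.step += 1
        return self.done
```

Requiring the steps in sequence means a single photo held up to the camera cannot satisfy all of them at once.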

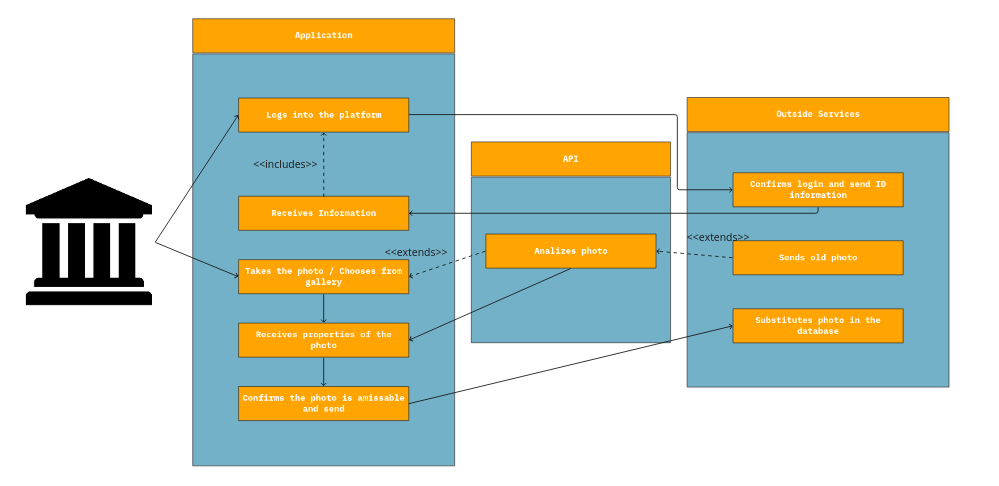

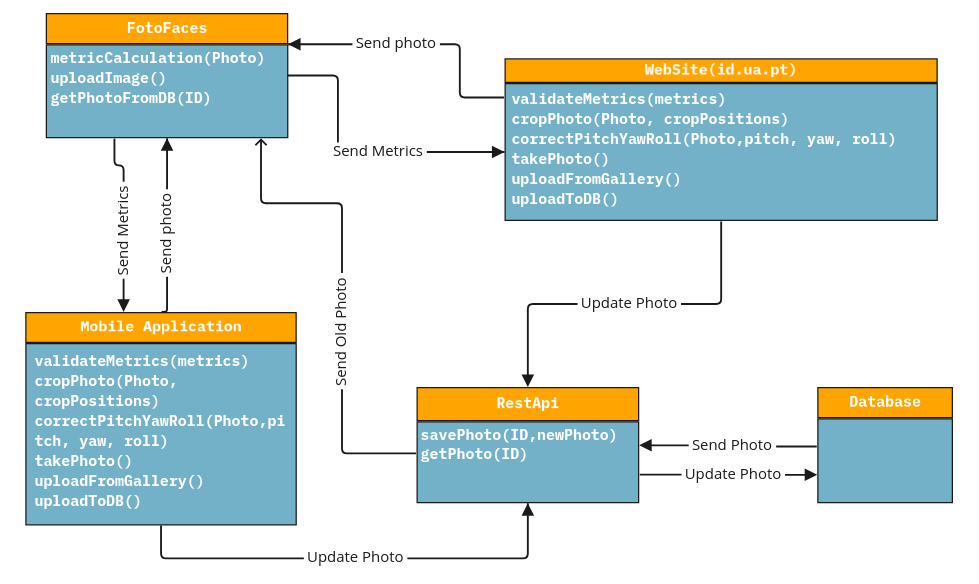

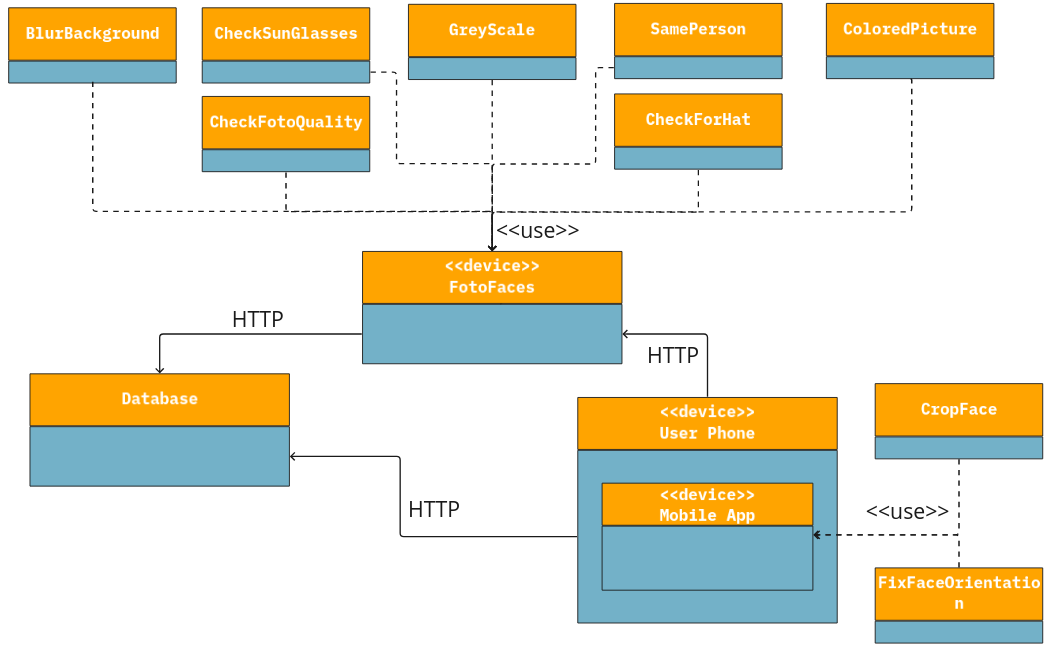

Architecture

Simple Architecture

Inception Phase

Communication Plan

Team communication

Advisor-Team discussion

Project Backlog management

Promotional Website

Development Tools

Mobile App building

Code repository

Website building

Team communication

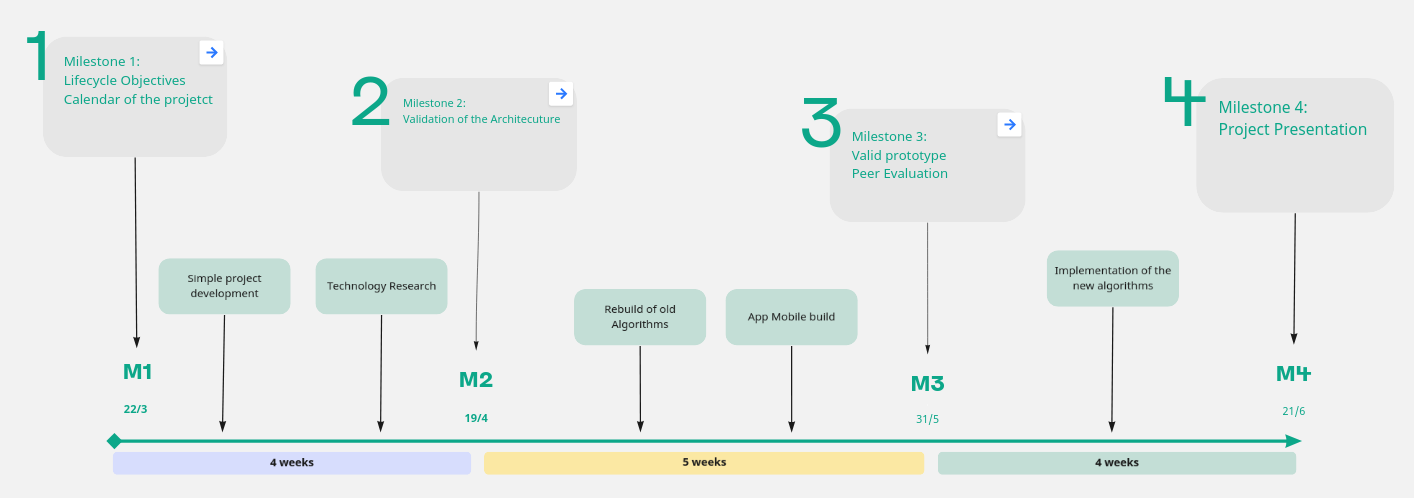

Project Calendar

Elaboration Phase

Non-Functional Requirements

- Scalability - The API must handle many users at the same time

- Reliability - The application and the API should run without crashing

- Availability - The service should be available to any user at any time

- Maintainability - Documentation and infrastructure must be kept up to date

- Usability - The application must be intuitive to use

Actors

Employees

Human Resources representatives

Use Cases

The user opens the app, logs in, checks their photo and decides to take a newer one. The app offers ways to either upload a photo or take a new one. The user waits for the response containing all the FotoFaces metrics; the mobile app then validates those criteria, and a new screen shows the photo after cropping and correcting its pitch, yaw and roll, offering the user the choice to take the red or the blue pill.

Domain Model

Deployment Diagram

State of Art

id.ua.pt already offers services that include software similar to the one we are going to build

Construction Phase

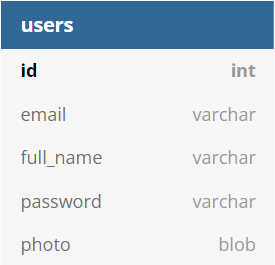

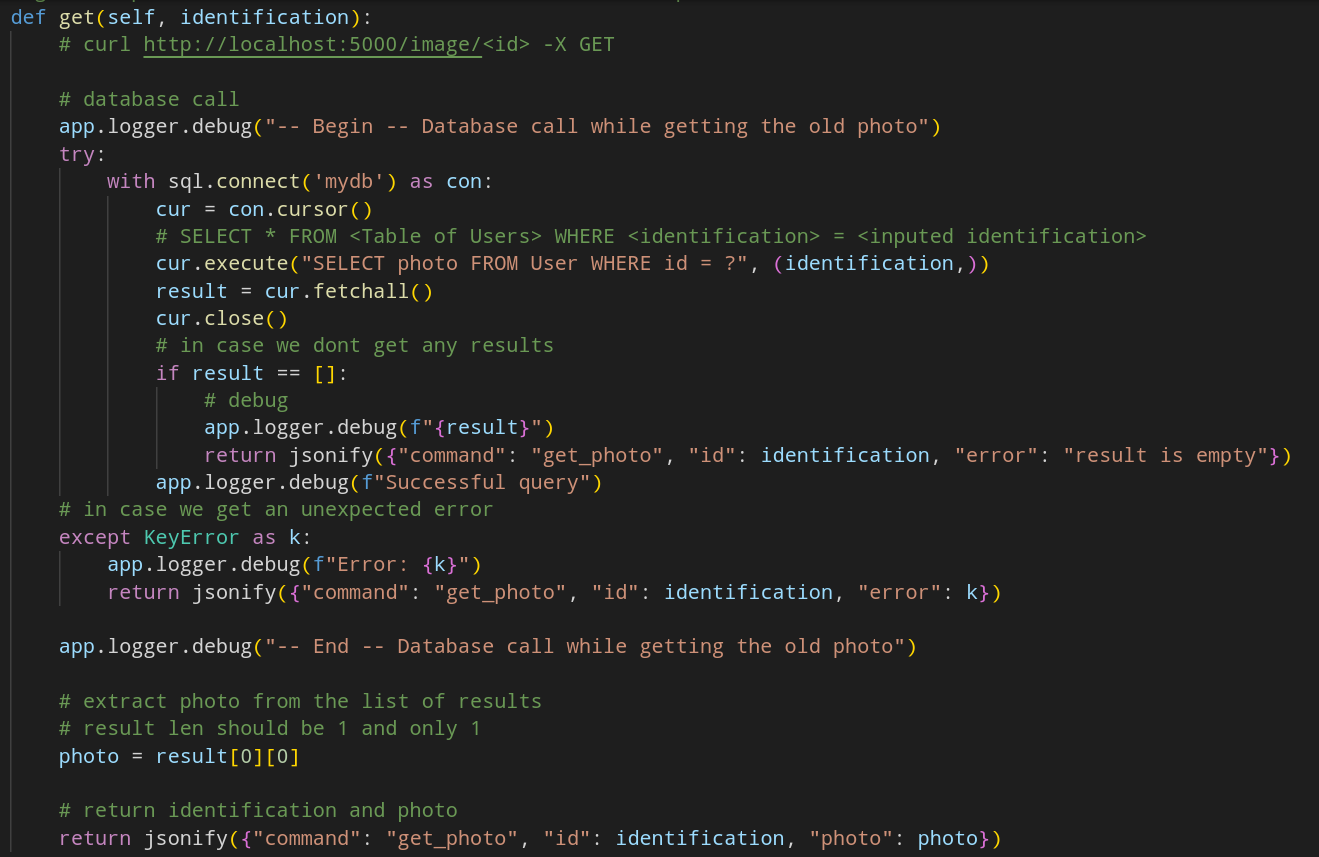

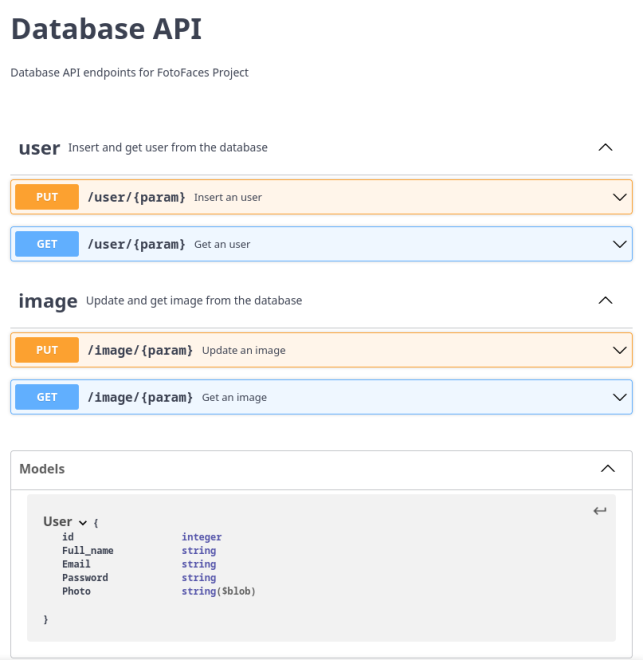

Database

Database Creation

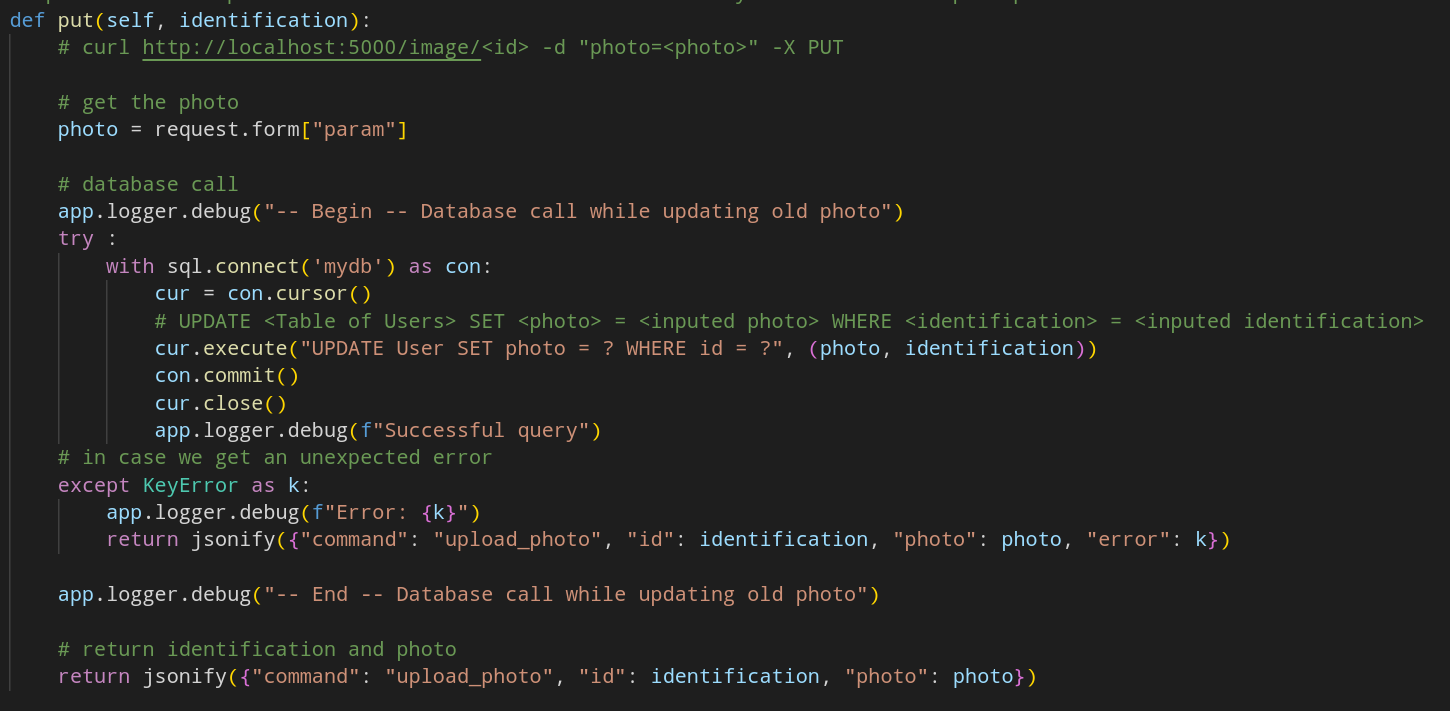

Database Update

Database Get
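The creation, update and get operations above can be sketched with Python's built-in `sqlite3` module. The documentation does not name the database technology, so SQLite, the `photos` table and its columns, and the function names are all hypothetical.

```python
import sqlite3


def create_database(path=":memory:"):
    """Create (if needed) a hypothetical table mapping user IDs to photos."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS photos ("
        " user_id TEXT PRIMARY KEY,"
        " photo BLOB NOT NULL)"
    )
    return conn


def update_photo(conn, user_id, photo_bytes):
    """Store the user's photo, replacing any previous one."""
    conn.execute(
        "INSERT OR REPLACE INTO photos (user_id, photo) VALUES (?, ?)",
        (user_id, photo_bytes),
    )
    conn.commit()


def get_photo(conn, user_id):
    """Return the stored photo bytes, or None if the user has no photo."""
    row = conn.execute(
        "SELECT photo FROM photos WHERE user_id = ?", (user_id,)
    ).fetchone()
    return row[0] if row else None
```

Using the user ID as the primary key keeps exactly one current photo per user, which is what the API needs when comparing a new photo against the old one.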

Database Endpoints

Message Dealing

HTTP Message Example

Functional Requirements

- The system must be able to receive a photo and a user ID

- The system must get the user's old photo from the database

- The system must compare the photos and check whether they show the same person

- The system must detect a series of properties from the new photo

- The system must send the detected properties to the user in JSON format

- The system must allow plugins to be added to detect more properties
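The request flow described by these requirements can be sketched without Flask so that it runs with the standard library alone; in the project this logic would sit behind a Flask route. The function `handle_validation`, its parameters and the JSON keys are hypothetical, and the database lookup, face comparison and detectors are passed in as stand-in functions.

```python
import json


def handle_validation(user_id, new_photo, get_old_photo, same_person, detectors):
    """Process one request and return a JSON string with the detected properties.

    get_old_photo(user_id) -> stored photo or None   (hypothetical hook)
    same_person(old, new) -> bool                     (hypothetical hook)
    detectors: mapping of property name -> function(photo) -> value
    """
    if not user_id or new_photo is None:
        return json.dumps({"error": "a photo and a user ID are required"})

    old_photo = get_old_photo(user_id)
    result = {
        "user_id": user_id,
        # No stored photo means there is nothing to match against.
        "matches_old_photo": old_photo is not None and same_person(old_photo, new_photo),
    }
    # Each detector adds one property, so new detectors can be registered
    # without changing this function.
    for name, detect in detectors.items():
        result[name] = detect(new_photo)
    return json.dumps(result)
```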

Development Tools

Flask

OpenCV

Dlib

Algorithms

- Facial Recognition

- Face Reference Detected

- Face Orientation

- Frontal Face

- Analyse photo quality

- Analyse photo brightness

- Analyse whether the picture is colored

- Blur background

- Resize

- Cropping

- Eyes open

- Gaze

- Hat

- Glasses

- Sunglasses

- Implement deep learning
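The brightness and colored-picture analyses in the list above can be sketched in plain Python over a list of RGB pixels. The project uses OpenCV, so this is only an illustration of the idea: the mean-luma measure (ITU-R BT.601 weights), the channel-difference test and the `tolerance` value are assumptions, not the project's actual algorithms.

```python
def brightness(pixels):
    """Mean luma (0-255) using ITU-R BT.601 weights; pixels is a list of (r, g, b)."""
    if not pixels:
        raise ValueError("empty image")
    total = sum(0.299 * r + 0.587 * g + 0.114 * b for r, g, b in pixels)
    return total / len(pixels)


def is_colored(pixels, tolerance=10):
    """True if any pixel's channels differ by more than `tolerance` (hypothetical value).

    In a grayscale picture every pixel has r == g == b, so large channel
    differences indicate color.
    """
    return any(max(p) - min(p) > tolerance for p in pixels)
```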

Properties

Returns True or False for the following:

- Has a face

- Matches old photo

- Blurred

- Cropped

- Live

- Colored

- Eyes Open

- Frontal Profile

- Hat

- Glasses

- Sunglasses

Returns Levels for the following:

- Brightness

- Quality
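The split between True/False properties and level properties can be enforced when building the JSON message. A minimal sketch, assuming snake_case keys derived from the list above; the key names and `build_message` are hypothetical.

```python
import json

# Keys derived from the property list; the snake_case names are assumed.
BOOLEAN_PROPERTIES = [
    "has_face", "matches_old_photo", "blurred", "cropped", "live", "colored",
    "eyes_open", "frontal_profile", "hat", "glasses", "sunglasses",
]
LEVEL_PROPERTIES = ["brightness", "quality"]


def build_message(values):
    """Serialize the detected properties, checking each has the expected type."""
    message = {}
    for key in BOOLEAN_PROPERTIES:
        if not isinstance(values.get(key), bool):
            raise TypeError(f"{key} must be True or False")
        message[key] = values[key]
    for key in LEVEL_PROPERTIES:
        value = values.get(key)
        # bool is a subclass of int in Python, so reject it explicitly here.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise TypeError(f"{key} must be a numeric level")
        message[key] = value
    return json.dumps(message)
```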

Construction Phase

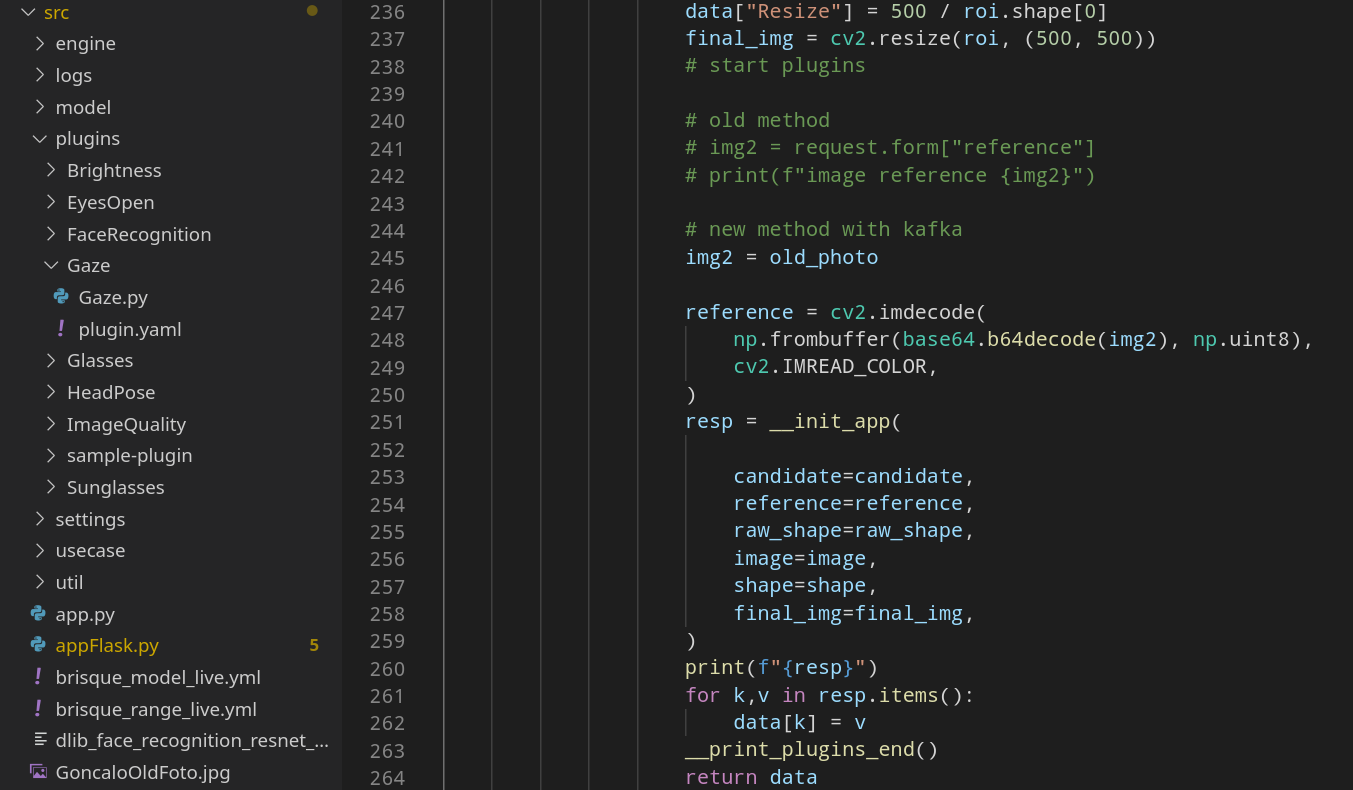

Plugin Architecture

Each plugin folder (such as Gaze) contains a Python file with the algorithm code, along with helper functions if needed, and a .yaml configuration file.

The main application gathers all the plugins and runs them one after another until none are left to execute.

It then collects each algorithm's result and combines them into a single JSON message.
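The plugin flow can be sketched with an in-memory registry. In the project each plugin lives in its own folder and its configuration comes from the .yaml file (which could be read, for example, with PyYAML's `yaml.safe_load`); here the configuration is passed as keyword arguments instead. The `plugin` decorator, the two stand-in plugins and `run_plugins` are hypothetical.

```python
import json

# Hypothetical registry standing in for the plugin folders.
PLUGINS = []


def plugin(name, **config):
    """Register a plugin function under `name` with its configuration."""
    def register(func):
        PLUGINS.append({"name": name, "config": config, "run": func})
        return func
    return register


@plugin("brightness", min_value=80)
def brightness_plugin(image, config):
    # Stand-in: `image` is a flat list of grey values.
    value = sum(image) / len(image)
    return {"value": value, "ok": value >= config["min_value"]}


@plugin("has_face")
def face_plugin(image, config):
    return bool(image)  # stand-in for a real face detector


def run_plugins(image):
    """Run every registered plugin in order and merge the results into one JSON message."""
    results = {}
    for p in PLUGINS:
        results[p["name"]] = p["run"](image, p["config"])
    return json.dumps(results)
```

Because the runner only iterates over the registry, adding a property means adding a plugin, not editing the main application.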

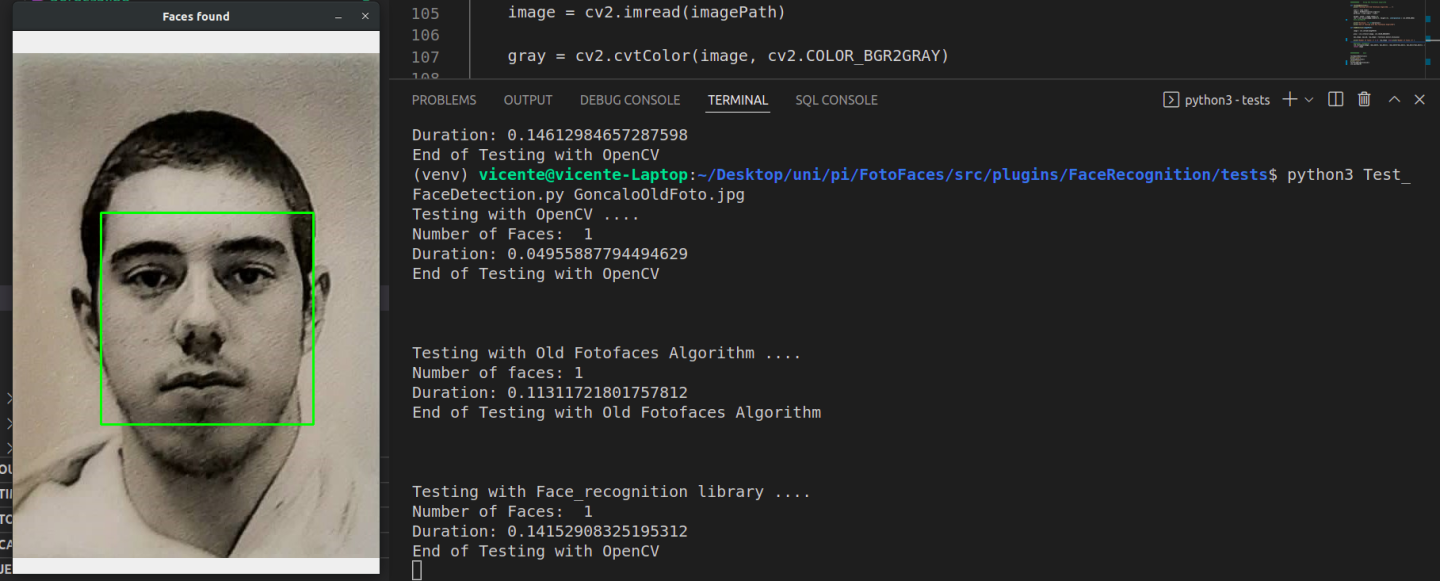

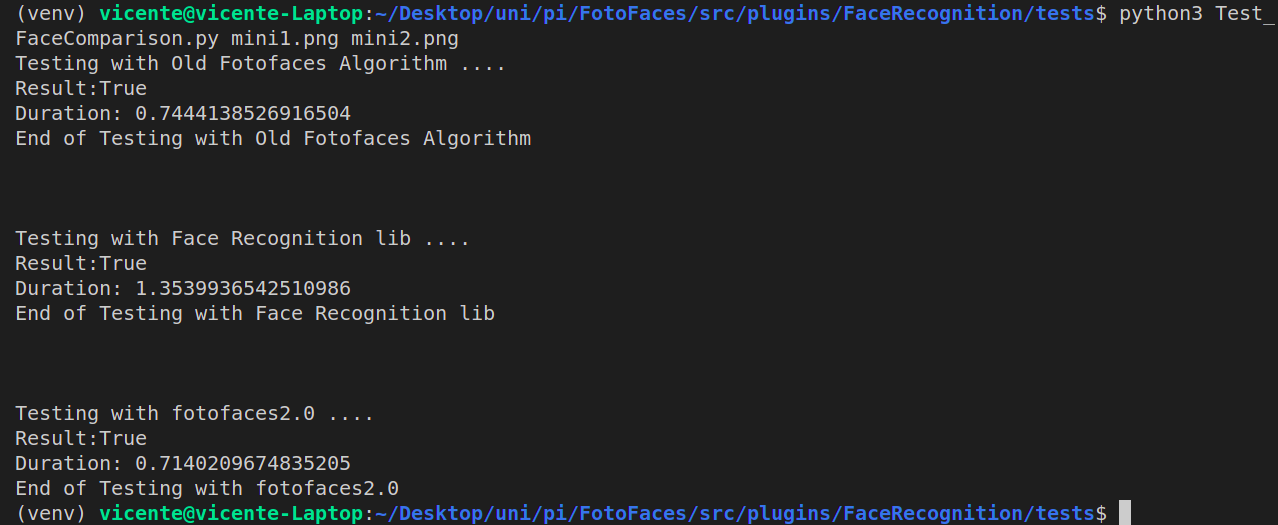

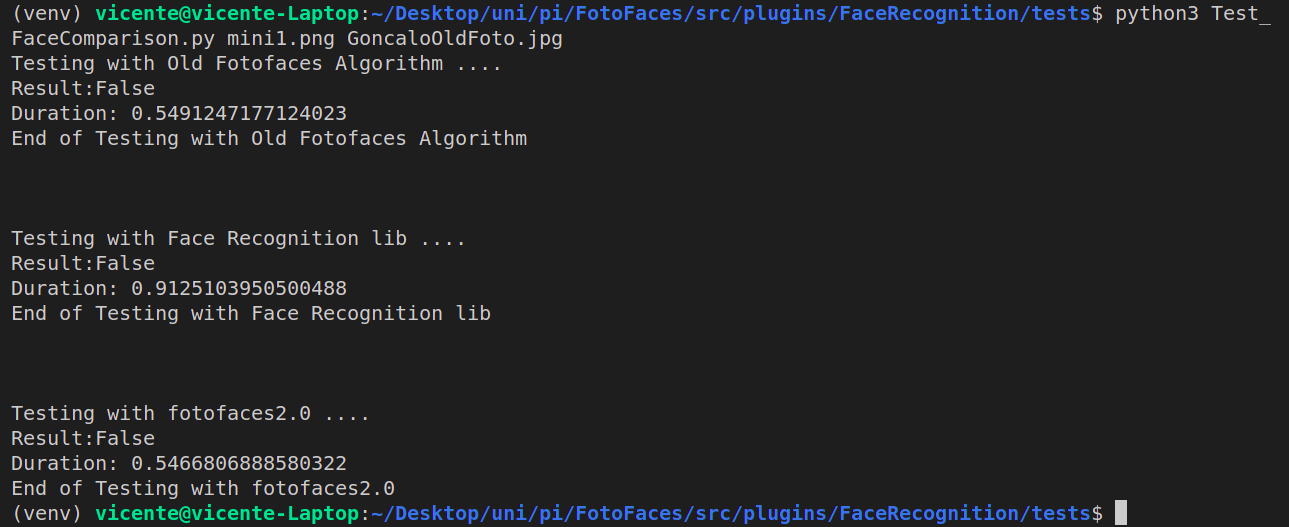

Testing

Each test targets a specific algorithm.

The tests measure how long each algorithm takes to execute in both the old and the new FotoFaces, as well as how well it works.
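The timing side of these tests can be sketched with `time.perf_counter`. The functions `time_algorithm` and `compare`, and the idea of averaging over repeated runs, are assumptions about how such a comparison might be set up, not the project's test harness.

```python
import time


def time_algorithm(func, image, repeats=5):
    """Return the average execution time of func(image) in seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    start = time.perf_counter()
    for _ in range(repeats):
        func(image)
    return (time.perf_counter() - start) / repeats


def compare(old_func, new_func, image, repeats=5):
    """Time the old and new versions of one algorithm on the same image."""
    return {
        "old": time_algorithm(old_func, image, repeats),
        "new": time_algorithm(new_func, image, repeats),
    }
```

Averaging several runs reduces the noise from a single measurement; `perf_counter` is used because it is a monotonic, high-resolution clock.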

Face Detection

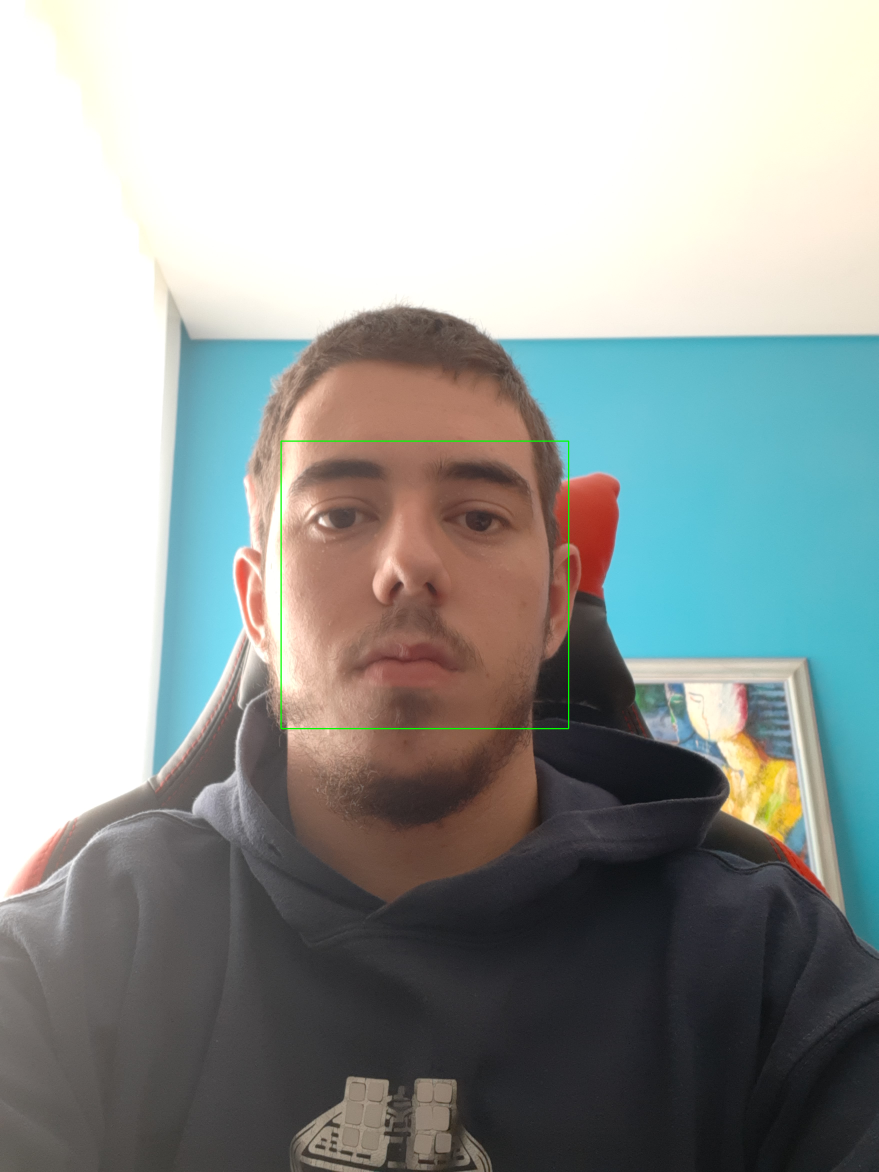

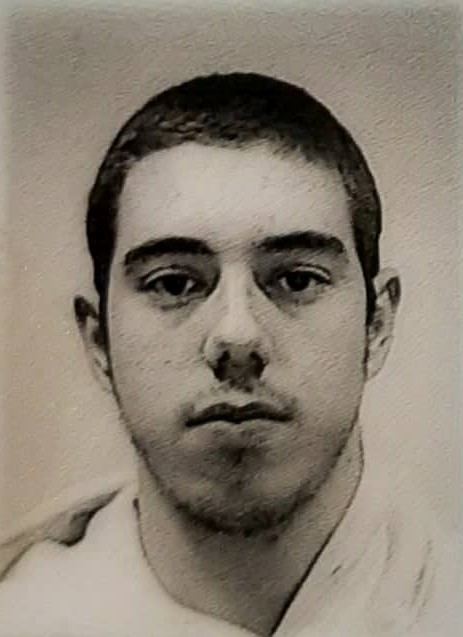

Facial Comparison

Same person = True

Different people = False
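A common way to produce this True/False result is to compare face descriptors by Euclidean distance. The sketch assumes 128-dimensional descriptors such as those returned by dlib's `face_recognition_model_v1.compute_face_descriptor`, and the 0.6 threshold commonly used with that model; whether FotoFaces uses this exact method and value is an assumption.

```python
import math

# Distance threshold commonly used with dlib's 128-D face descriptors;
# using it here is an assumption about the FotoFaces configuration.
THRESHOLD = 0.6


def same_person(descriptor_a, descriptor_b, threshold=THRESHOLD):
    """True if two face descriptors are closer than `threshold` (Euclidean distance)."""
    if len(descriptor_a) != len(descriptor_b):
        raise ValueError("descriptors must have the same length")
    return math.dist(descriptor_a, descriptor_b) < threshold
```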

Algorithms Implemented

Face Detection

Returns a shape

Face Recognition

Returns True or False; in the example it returns True

Sunglasses Detection

Returns True or False; in the examples it returns True for both the left and the right image

Glasses Detection

Returns True or False; in the examples it returns True for both the left and the right image

Focus/Gaze

Returns a value between 50 and 100, the higher the better

Image Quality

Returns a value above 0, the lower the better

Head Position

Returns values above 0, the lower the better

Hat Detection

Returns True or False; in the examples it returns True for both the left and the right image

Brightness

Returns a value above 0, the higher the better

Open Eyes

Returns values between 0.10 and 0.50, the higher the better
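The 0.10 to 0.50 range for Open Eyes matches typical values of the eye aspect ratio (EAR), computed from six eye landmarks as (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|). That FotoFaces uses EAR is an assumption; the sketch takes landmarks ordered like dlib's 68-point model (corner, two top points, corner, two bottom points), and the 0.2 threshold in `eyes_open` is hypothetical.

```python
import math


def eye_aspect_ratio(eye):
    """EAR for six (x, y) landmarks ordered p1..p6: corner, top, top, corner, bottom, bottom."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def eyes_open(left_eye, right_eye, threshold=0.2):
    """True if the average EAR of both eyes is above a hypothetical threshold."""
    ear = (eye_aspect_ratio(left_eye) + eye_aspect_ratio(right_eye)) / 2.0
    return ear > threshold
```

The ratio drops towards zero as the eyelids close, because the vertical distances shrink while the corner-to-corner width stays roughly constant.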

Cropping

Returns a cropped image
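Cropping can be sketched as expanding the detected face box by a margin and clamping it to the image borders. With OpenCV the final step would be NumPy slicing (`image[top:bottom, left:right]`); this plain-Python version slices a list of rows instead. The `margin` value and both function names are hypothetical.

```python
def crop_box(face, image_width, image_height, margin=0.4):
    """Expand a face box (x, y, w, h) by `margin` on each side, clamped to the image."""
    x, y, w, h = face
    dx, dy = int(w * margin), int(h * margin)
    left = max(0, x - dx)
    top = max(0, y - dy)
    right = min(image_width, x + w + dx)
    bottom = min(image_height, y + h + dy)
    return left, top, right, bottom


def crop(image, face, margin=0.4):
    """Crop a row-major image (a list of rows) around the face box."""
    height, width = len(image), len(image[0])
    left, top, right, bottom = crop_box(face, width, height, margin)
    return [row[left:right] for row in image[top:bottom]]
```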